Introduction

Computational infrastructure has evolved dramatically over the past few decades, moving away from proprietary on-premises systems toward cloud computing. This paradigm shift has enabled more scalable and flexible resource management for enterprises across a wide variety of industries worldwide, while also reducing capital expenditure.

However, the centralization of cloud services introduced challenges such as vendor lock-in and data sovereignty issues. Decentralized Physical Infrastructure Networks (DePIN) have recently emerged as a potential solution. By distributing computing and storage across independent nodes, they challenge traditional cloud providers with a more resilient, cost-effective, and censorship-resistant business model.

Key Takeaways

- Evolution of IT Infrastructure: The shift from on-premises systems to cloud computing revolutionized resource management, reducing costs and enabling scalability.

- Limitations of Centralized Cloud Providers: Issues like vendor lock-in and data sovereignty highlight the drawbacks of over-relying on centralized cloud services.

- Introduction of DePIN: Decentralized Physical Infrastructure Networks (DePIN) offer a new model that distributes resources across independently operated nodes, addressing the cloud’s centralization issues.

- DePIN Benefits: DePIN provides a resilient, cost-effective, and secure infrastructure model, reducing reliance on centralized providers and lowering operational costs (similar to crowdsourcing) by aggregating idle resources.

- Economic Impact: DePIN taps into underutilized global resources, incentivizing participants through token rewards, making infrastructure more accessible and democratized.

- Cloud Adoption Trends: Cloud adoption continues to grow. Major providers like AWS, Azure, and Google Cloud dominate the market but face emerging competition from decentralized networks that may begin to erode their dominance.

- GPUs’ Role in AI: GPUs are critical in AI development, driving the demand for decentralized cloud solutions like DePIN to provide scalable and affordable GPU resources.

- Challenges for DePIN: DePIN faces hurdles in achieving demand-side traction, trust, and reliability compared to established cloud providers, alongside managing token price volatility.

- Market Opportunity: For DePIN to succeed, balancing supply and demand, ensuring operational reliability, and fostering user trust are essential.

Reducing Capital Expenditure Through Decentralization

Traditionally, enterprises had to invest heavily in physical hardware, which was costly to purchase, maintain, and scale, particularly for smaller businesses. In contrast, the decentralized nature of DePIN reduces dependence on centralized intermediaries, aligning incentives and minimizing bureaucratic overhead by using blockchains as a single source of truth.

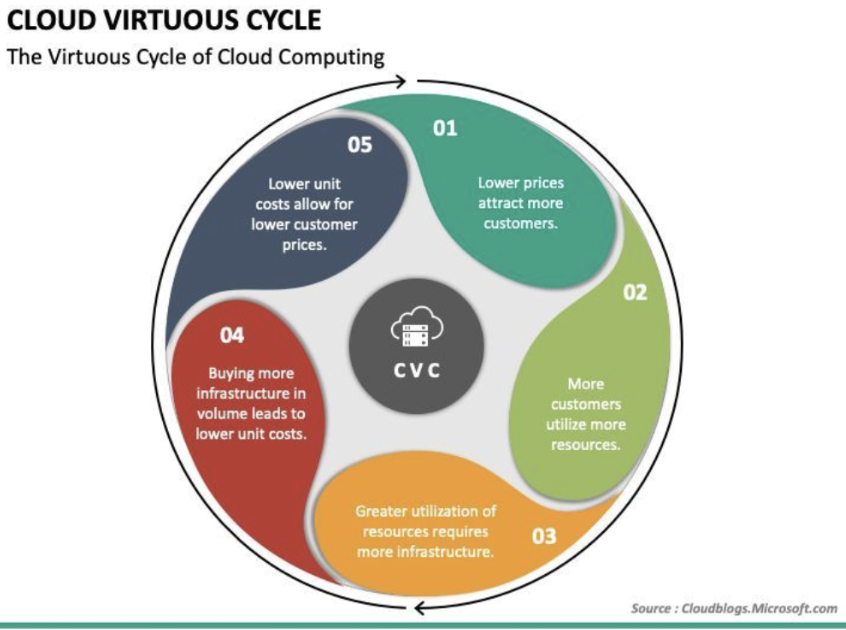

Figure 1: The Virtuous Cycle of Public Cloud

Source – Microsoft

The rise of cloud computing marked a shift from capital expenditure (CapEx) to operational expenditure (OpEx), democratizing access to computing resources and enabling rapid scaling for new businesses. This shift created a virtuous cycle for cloud providers, allowing them to reinvest in infrastructure, expand services, and attract more customers. As more enterprises migrated to the cloud, demand for cloud services grew rapidly, reinforced by network effects. Today, nearly two decades after the launch of Amazon Web Services (AWS), we are still witnessing the long tail of cloud adoption, with many organizations continuing to transition to cloud-based architectures.
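The CapEx-to-OpEx trade-off can be made concrete with a simple break-even calculation: how many months of renting equivalent capacity it takes before cumulative rent matches the upfront purchase price plus ongoing maintenance. This is a minimal sketch; the function name and every figure in it are hypothetical placeholders, not numbers from the sources cited in this report.

```python
from typing import Optional


def breakeven_month(upfront_capex: float, monthly_maintenance: float,
                    monthly_rent: float, horizon_months: int = 120) -> Optional[int]:
    """Return the first month in which cumulative cloud rent reaches the
    cumulative cost of owning the hardware (purchase plus maintenance).

    Returns None if renting stays cheaper for the whole horizon."""
    owned_cost = upfront_capex   # CapEx: paid once, on day one
    rented_cost = 0.0            # OpEx: accrues month by month
    for month in range(1, horizon_months + 1):
        owned_cost += monthly_maintenance
        rented_cost += monthly_rent
        if rented_cost >= owned_cost:
            return month
    return None


# Hypothetical example: a $100,000 server with $1,000/month upkeep
# versus renting comparable capacity for $5,000/month.
print(breakeven_month(100_000, 1_000, 5_000))
```

With these made-up inputs the break-even arrives in month 25, so renting is cheaper for any workload that lasts less than about two years. This is the kind of arithmetic that let new businesses start without large upfront hardware purchases.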

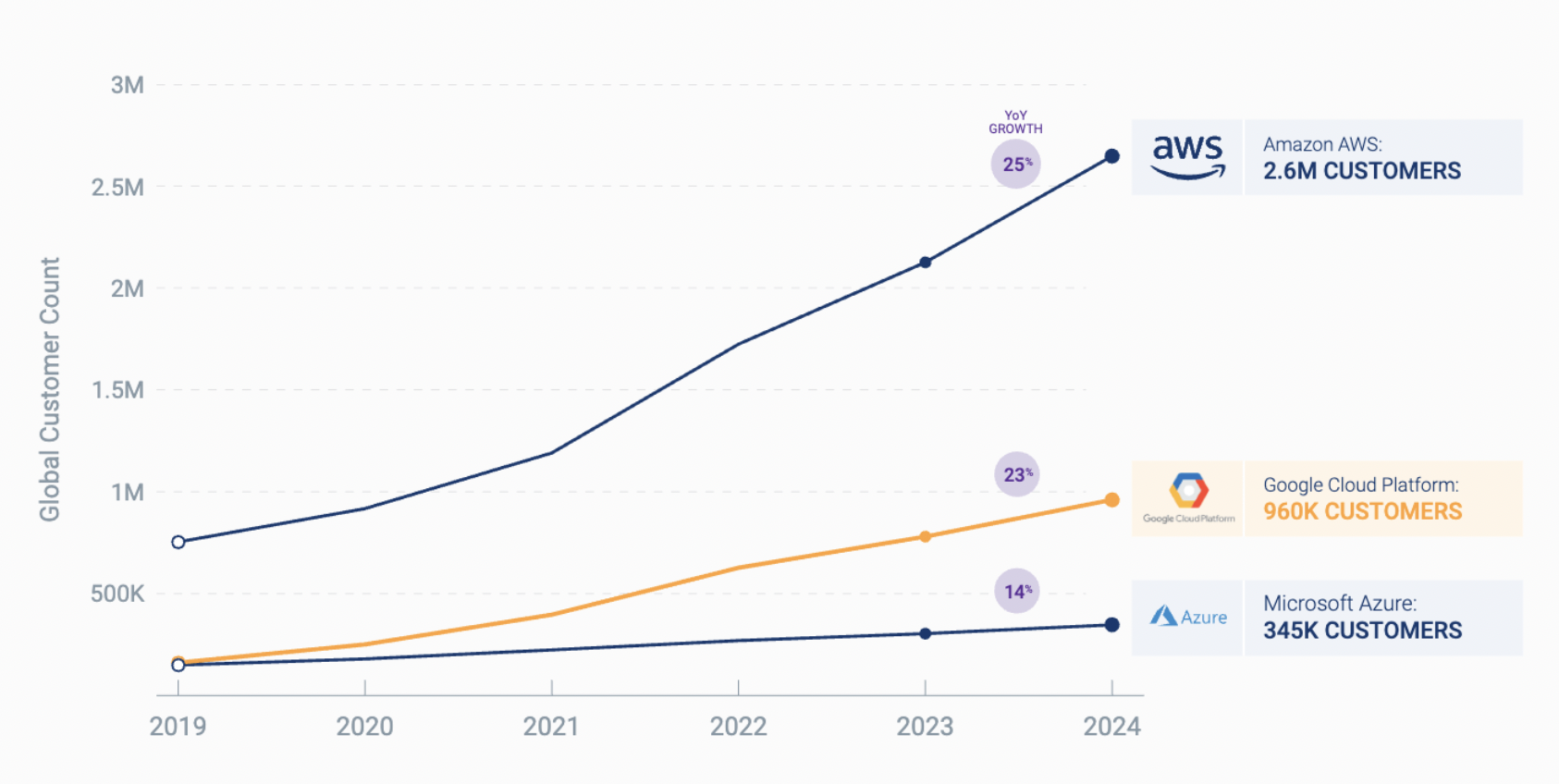

Figure 2: Growth In The Customer Base For Major Cloud Service Providers

Source: Holori

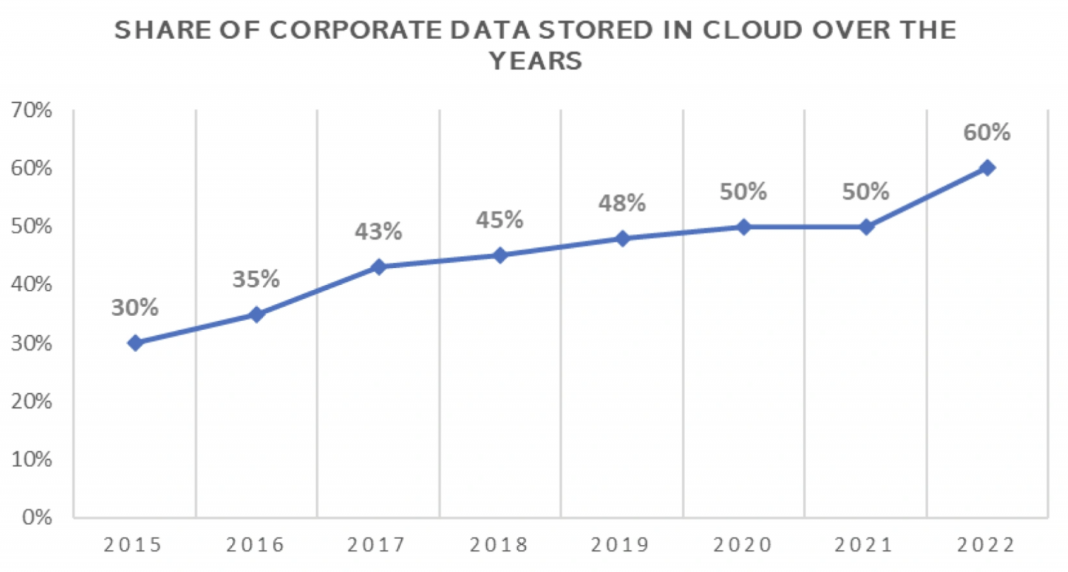

Figure 3: Growth In The Share of Corporate Data Outsourced To Cloud Infrastructures

Source: Edge Delta – The share of corporate data stored in cloud infrastructure has more than doubled since 2015.

However, cloud providers create dependency and require customers to place a high degree of trust in them, which reinforces the providers’ moat. DePIN, by contrast, aggregates underutilized global resources and makes them accessible through peer-to-peer networks with embedded marketplaces, reducing the need for significant upfront capital expenditure and lowering costs. By rewarding participation with network-issued tokens, DePIN turns users into stakeholders and drives network growth. Suppliers meet demand in this open marketplace, capturing a share of the value they provide and themselves benefiting from the upside of network effects.

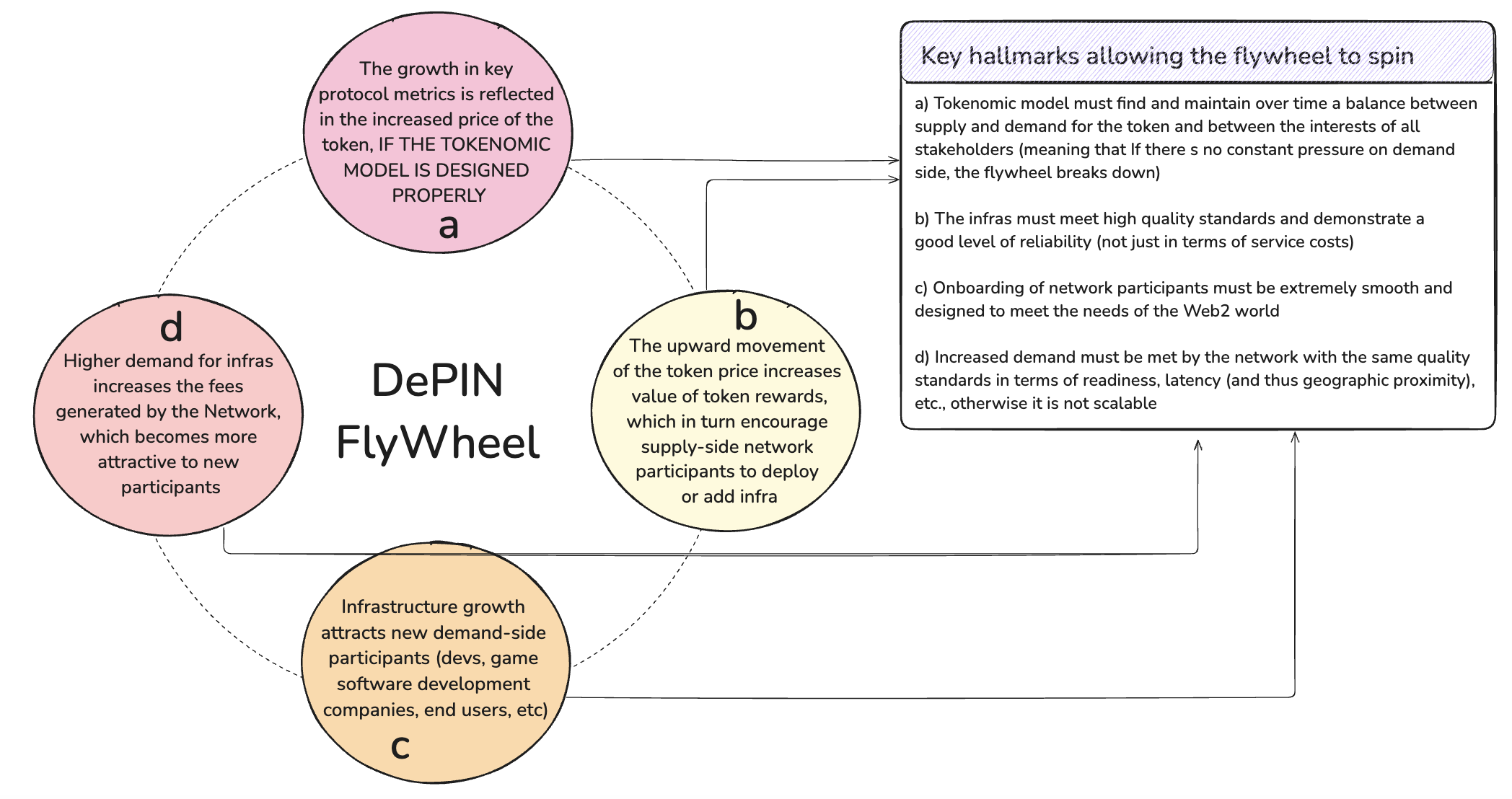

Figure 4: Decentralized Physical Infrastructure Networks

Source: Revelo Intel

State of The Art of Cloud Tech: Key Players, Numbers Involved

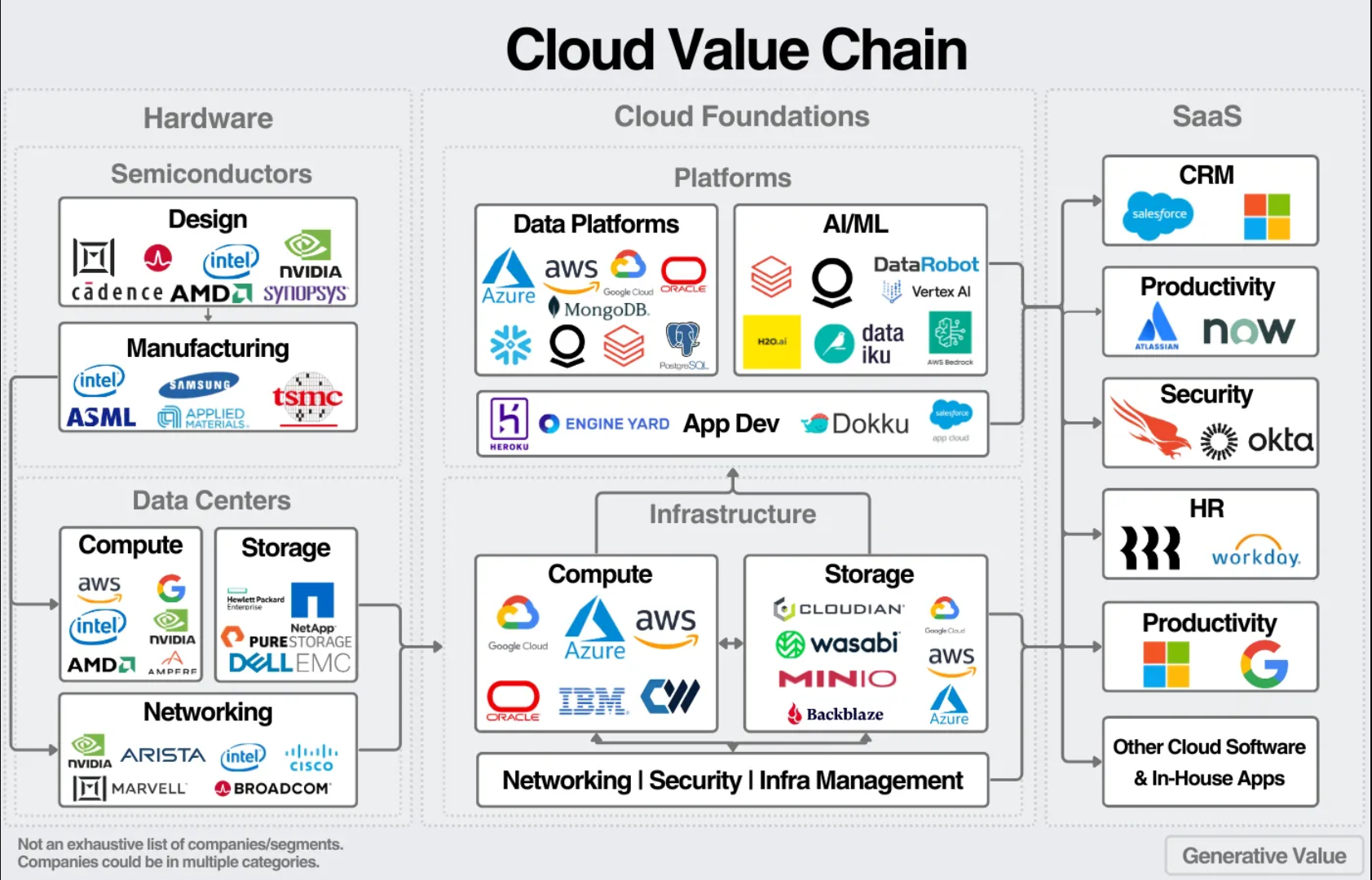

The cloud ecosystem is divided into three main segments:

| Hardware | Cloud Infra and Platforms | Cloud Software |
| --- | --- | --- |
| Semiconductors and data centers acting as physical foundational layers of the Cloud Service. | Virtualized layers that manage computing, storage, and networking, allowing developers to build and deploy applications in the cloud. | Applications built on top of cloud infra and platforms, often delivered as Software as a Service (SaaS). |

Figure 5: The Infrastructure Layers of the Cloud

Source: Generative Value – Cloud Infrastructure Layers

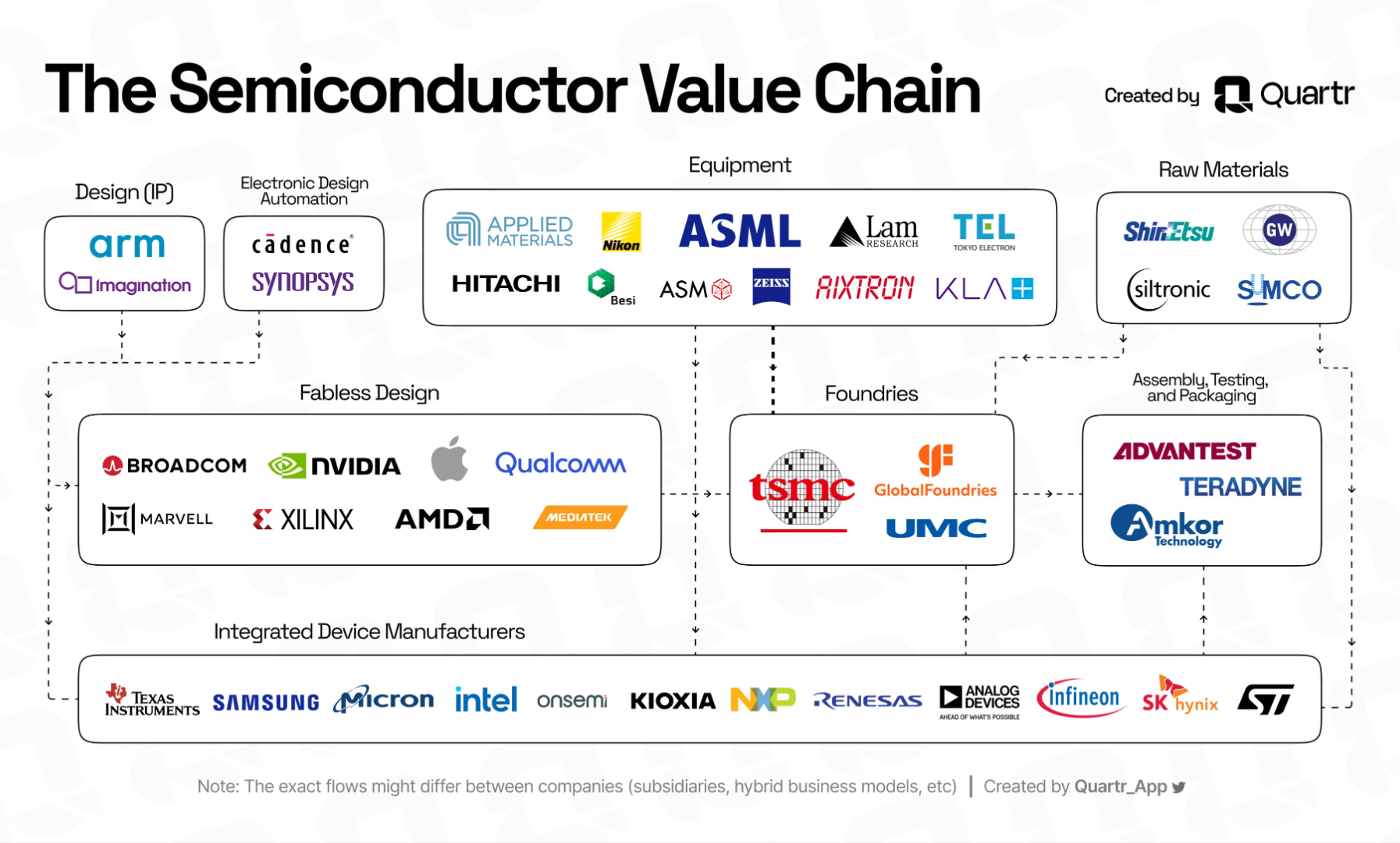

Semiconductor Industry:

The semiconductor industry is divided into design and manufacturing. Fabless firms design semiconductors but outsource production to foundries, while Integrated Device Manufacturers (IDMs) handle both design and production. Chip designers rely on Electronic Design Automation (EDA) tools from vendors such as Synopsys, Cadence, and Siemens EDA. Manufacturing is dominated by a few players such as TSMC and Samsung, particularly at advanced process nodes like 3nm. This market is highly consolidated, forming a de facto oligopoly.

Figure 6: How The Semiconductor Industry is Structured

Source: Quatr, Understanding the Semiconductor Value chain

Cloud Infrastructure:

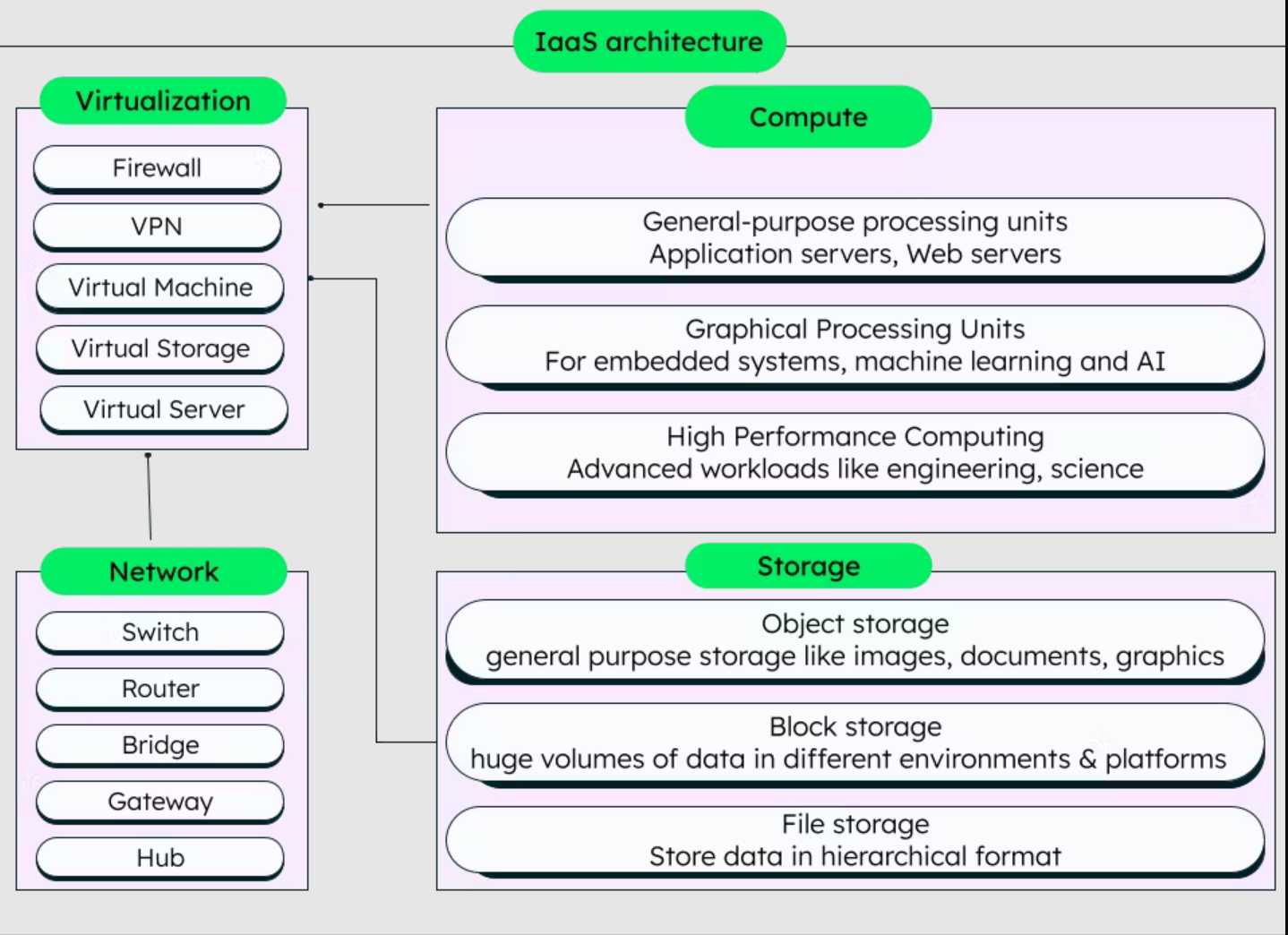

Cloud infrastructure virtualizes physical hardware and makes it accessible as services over the internet. Key technologies include virtualization, containers, and various storage solutions. Virtualization allows multiple virtual machines, each with its own operating system, to run on a single server, while containers offer a lighter-weight alternative that shares the host operating system’s kernel. Storage is categorized into object, file, and block storage, each suited to different use cases.

Figure 7: IaaS (Infrastructure as a Service) Key Components

Source: MongoDB, IaaS in Cloud Computing

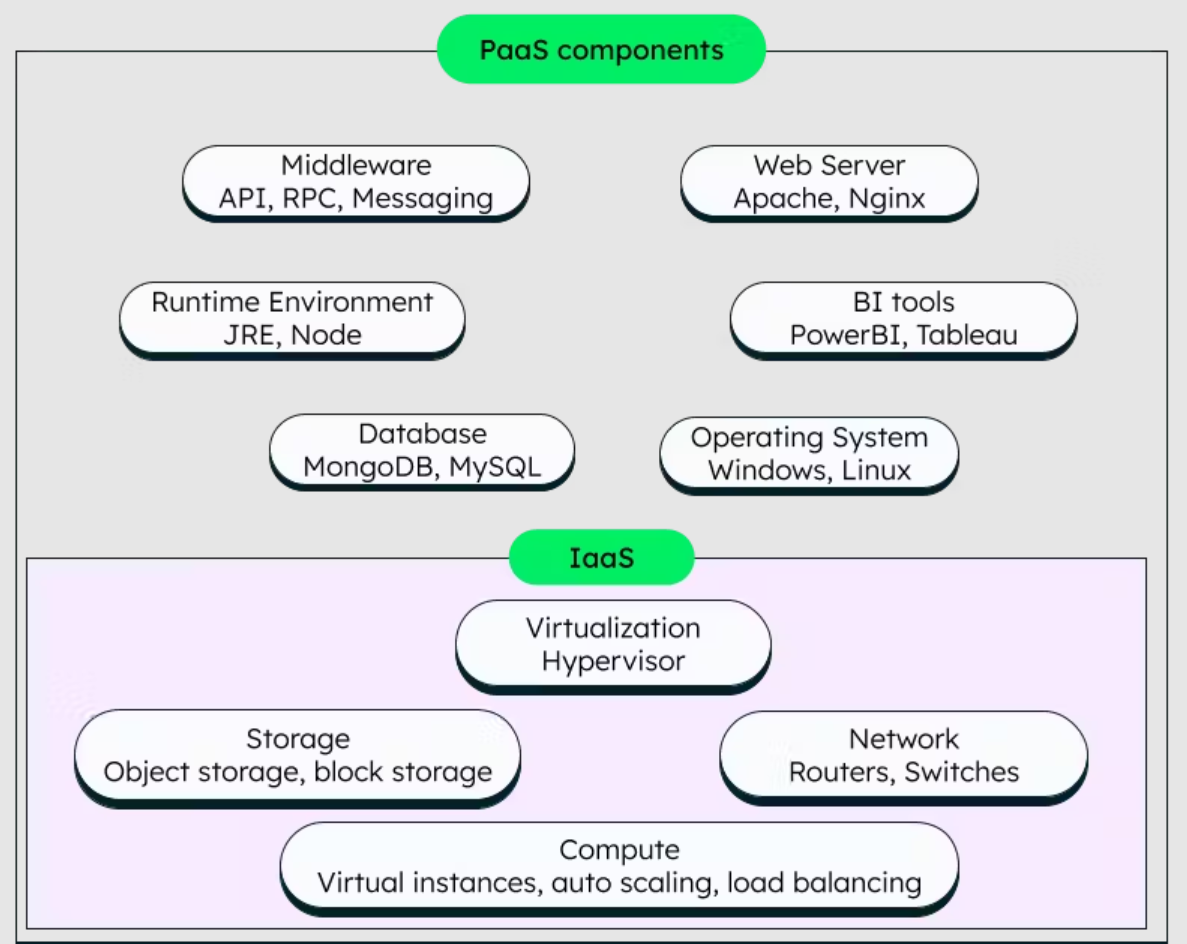

Cloud platforms such as Snowflake and Kubernetes abstract away infrastructure management so that developers can focus on application development. The environment offered to developers is comprehensive, spanning hardware, routers, operating systems, runtime environments, middleware, databases, web servers, and more.

Figure 8: PaaS (Platform as a Service) Key Components

Source: MongoDB, PaaS explained

Cloud Software:

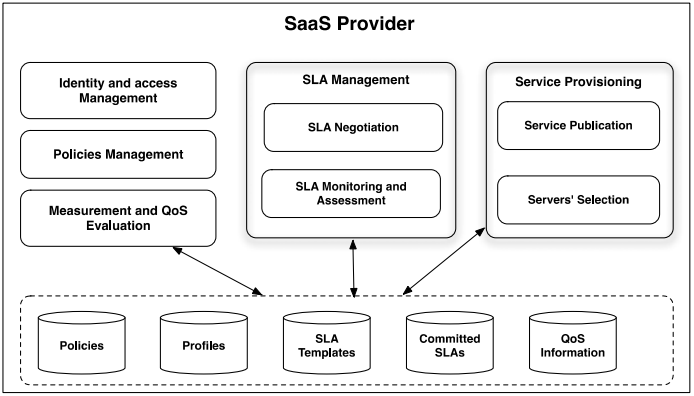

Cloud software, also known as SaaS, is a cloud computing model in which applications, running on top of cloud infrastructure and platforms, are delivered over the internet, typically on a subscription basis. Instead of purchasing and installing software on individual machines, users access it through a web browser, while a third-party provider hosts and maintains it.

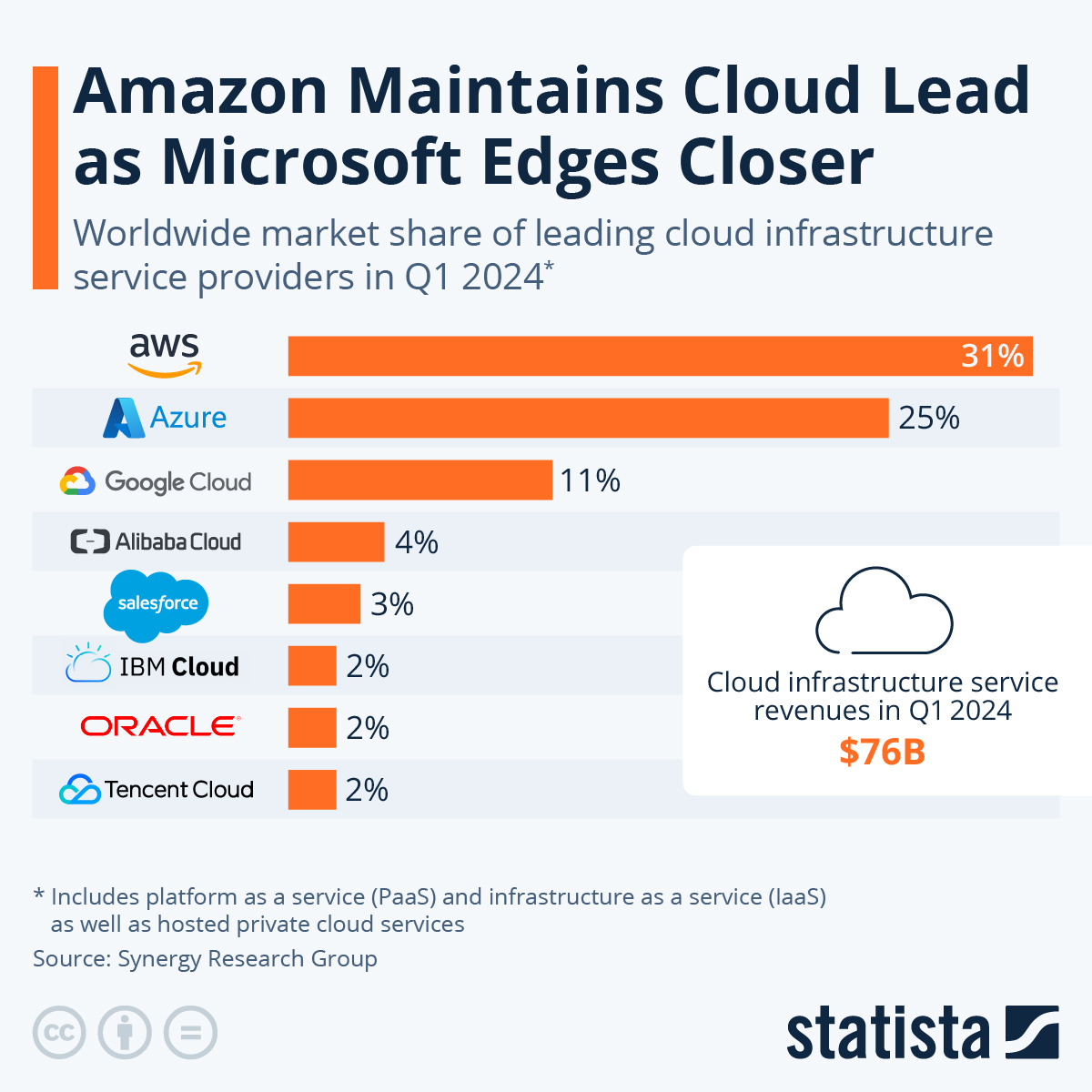

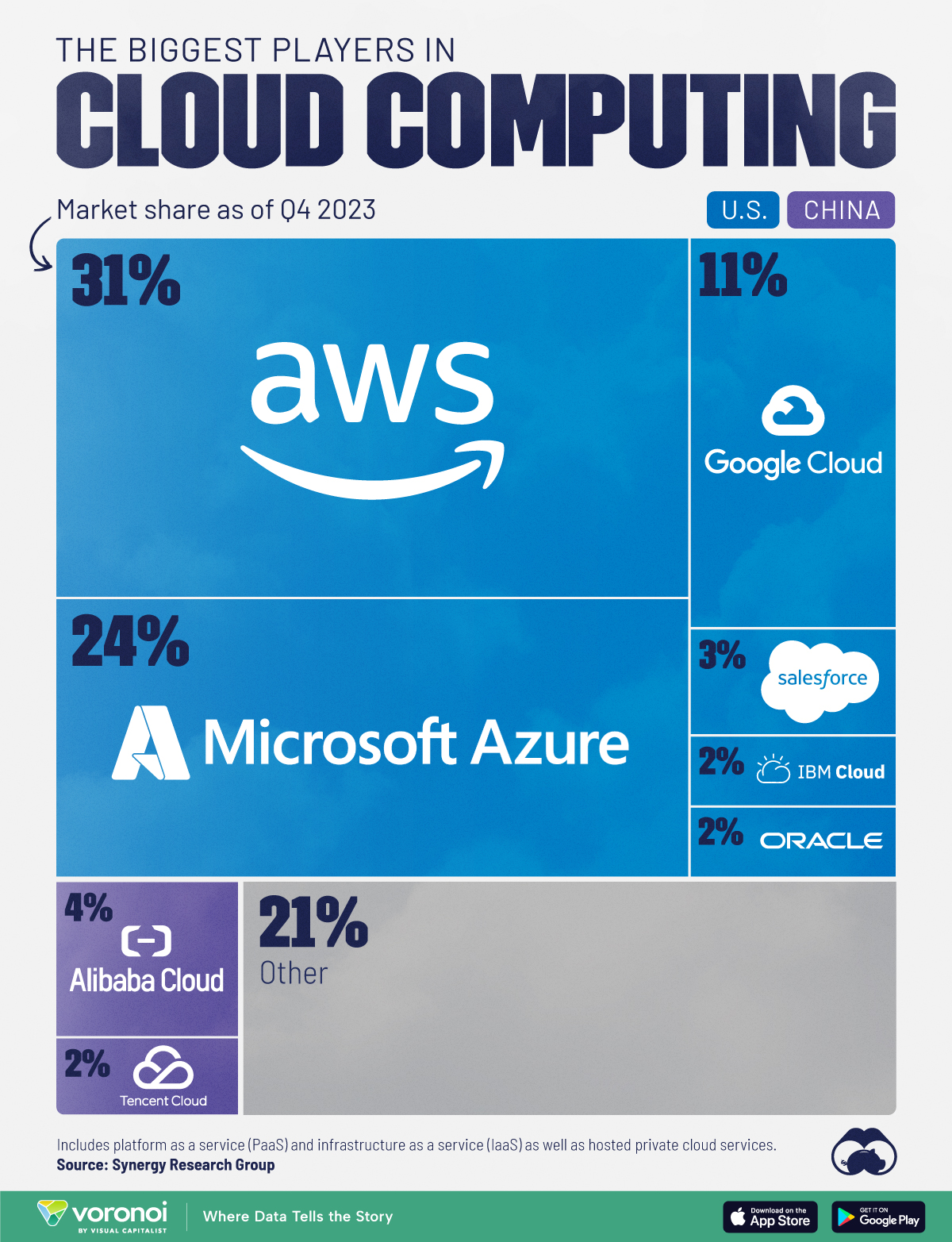

The market is led by hyperscalers, with AWS, Azure, and GCP capturing about 70% of the pie.

Figure 9: How SaaS is Structured

Source: Elarbi Badidi, A framework for SaaS Selection and Provisioning

Cloud environments include public, private, and hybrid models, each serving different needs. Public clouds offer scalable services at flexible pricing but may raise security and privacy concerns. Private clouds provide more control and security but at higher costs. Hybrid clouds combine both, offering flexibility but with added complexity.

Figure 10: Types of Clouds in Comparison

Source: BMC – Cloud Types

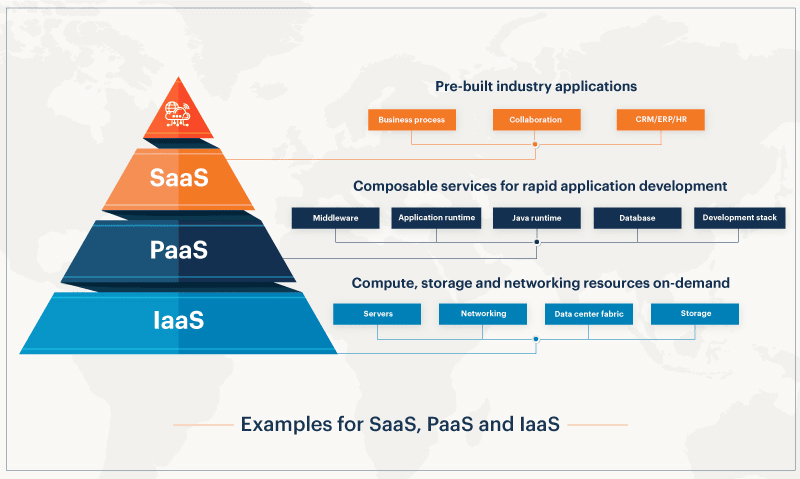

Within these deployment models, there are three main services (IaaS, PaaS, and SaaS), which are tailored to different consumer needs:

- Infrastructure-as-a-Service (IaaS): This model offers virtualized computing resources over the internet, such as virtual machines, storage, and networks. Customers provision and manage these resources to build and scale their own IT environments, retaining control over operating systems, storage, and deployed applications while avoiding the maintenance and overhead of physical hardware.

- Platform-as-a-Service (PaaS): This service provides a cloud-based environment with all the necessary tools and frameworks for developing, testing, deploying, and managing applications. It abstracts the infrastructure layer, enabling developers to focus on writing code without managing the underlying servers, storage, or networking.

- Software-as-a-Service (SaaS): This model delivers fully functional software applications over the internet, allowing users to access and utilize these applications without needing to manage the underlying hardware or software infrastructure.

Figure 11: Overview of The Main Services Available, Based On The Level of Complexity Outsourced

Source: Kinsta – Difference Between IaaS and PaaS

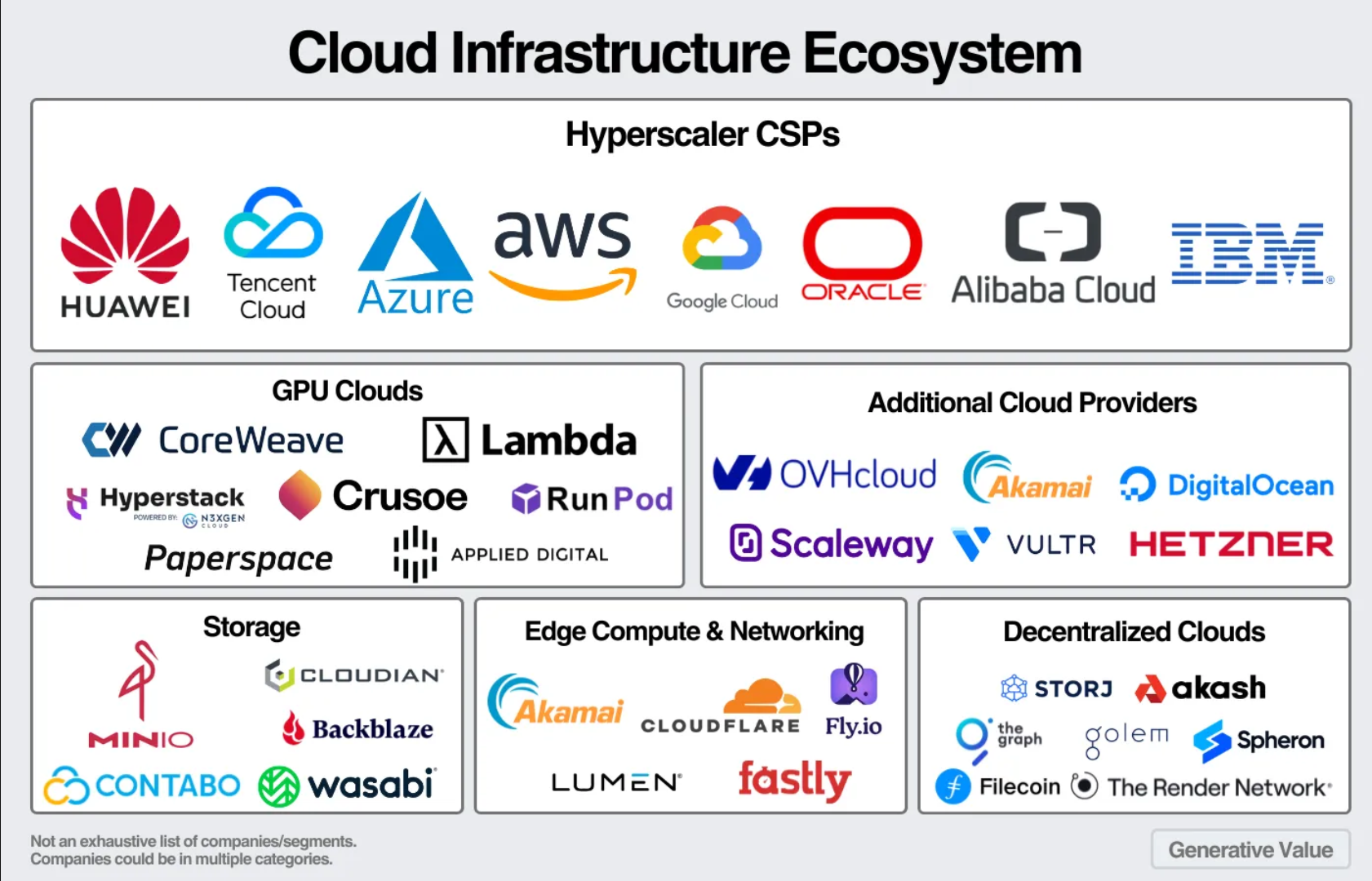

The options potentially available to customers interested in cloud services are:

- Storage Clouds, focused on providing efficient storage solutions.

- GPU Clouds, tailored for AI workloads requiring high computational power.

- Edge Compute Clouds, which offer localized computing services closer to end-users for lower latency.

- Decentralized Clouds, using blockchain to pool and rent out distributed computing resources; in other words, what the crypto industry has dubbed DePIN (Decentralized Physical Infrastructure Networks).

Figure 12: Cloud Infra Ecosystem

Source: Generative Value – An overview on Cloud history, tech and market.

While most companies still rely on hyperscalers, emerging models like Decentralized Physical Infrastructure Networks (DePIN) are gaining traction. DePIN leverages blockchain to pool and rent out distributed computing resources, offering a decentralized alternative to traditional cloud models. The latter reflect a winner-takes-all dynamic in which a handful of providers capture the majority of the market.

Figure 13: Worldwide Cloud Market Share

Source: Statista.com

Democratizing Access To GPUs in the Era of AI

GPUs have become essential in AI, providing the parallel processing power needed for complex computations. Whereas CPUs are optimized to execute a relatively small number of tasks quickly on a few powerful cores, GPUs are designed for massive parallelism across thousands of simpler cores, making them ideal for AI workloads like deep learning. Key components include CUDA cores for parallel processing and high-bandwidth memory such as HBM2, which enables efficient handling of large datasets. In short, CPUs and GPUs are designed to handle different types of tasks:

- CPUs are like highly skilled multitaskers, capable of handling a few complex jobs at once, such as running an entire operating system or managing multiple software applications. They are excellent for tasks like managing databases or running office applications.

- GPUs, in contrast, are like specialized workers in a factory, each one performing a small, repetitive task. This specialization makes GPUs much faster at processing large amounts of data in parallel, which is essential for AI tasks like training a model to understand speech or process video footage.
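The “specialized workers” picture corresponds to data parallelism: one simple operation applied independently to many pieces of data. The sketch below shows only that decomposition pattern, using Python’s standard-library thread pool as a stand-in for GPU cores. It is an illustrative assumption and says nothing about actual GPU performance; in CPython, threads will not speed up CPU-bound arithmetic like this.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import List


def scale_chunk(chunk: List[float], factor: float) -> List[float]:
    # Each worker applies the same small, simple operation to its own slice,
    # like many simple GPU cores each handling a piece of the data.
    return [x * factor for x in chunk]


def data_parallel_scale(data: List[float], factor: float, workers: int = 4) -> List[float]:
    # Split the input into independent chunks of roughly equal size.
    size = max(1, -(-len(data) // workers))  # ceiling division
    chunks = [data[i:i + size] for i in range(0, len(data), size)]
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map yields results in input order, so reassembly is trivial.
        partial_results = pool.map(scale_chunk, chunks, [factor] * len(chunks))
        return [x for part in partial_results for x in part]


print(data_parallel_scale([1.0, 2.0, 3.0, 4.0, 5.0], 10.0))
```

Because no chunk depends on any other, the work can be spread over as many workers as are available. That independence is what GPUs exploit at far larger scale.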

NVIDIA’s advancements, such as Tensor Cores and NVLink, have significantly enhanced GPU performance, making GPUs crucial for training large AI models like GPT-4. The rise of GPUs has transformed AI, in some cases reducing training times from weeks to hours and making larger, more accurate models practical.

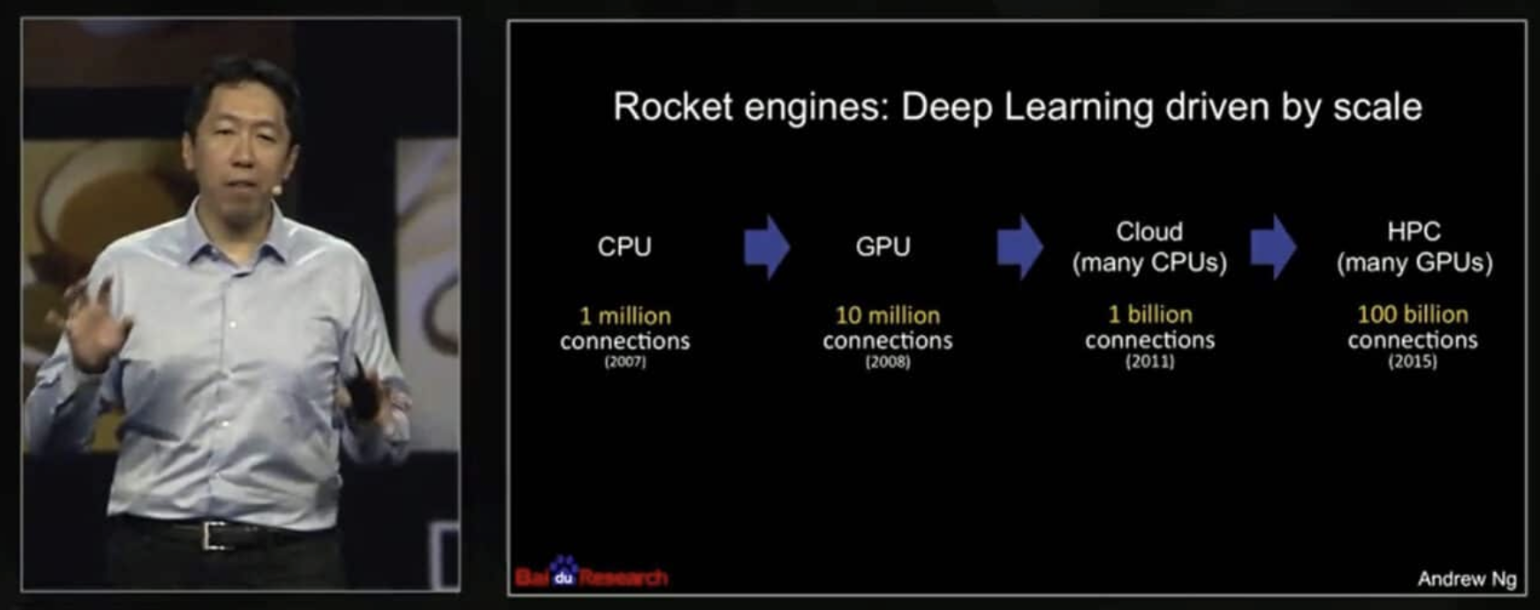

Figure 14: The Evolution of Deep Learning: Scaling From CPUs to HPC Through Many GPUs

Source: NVIDIA GTC 2015

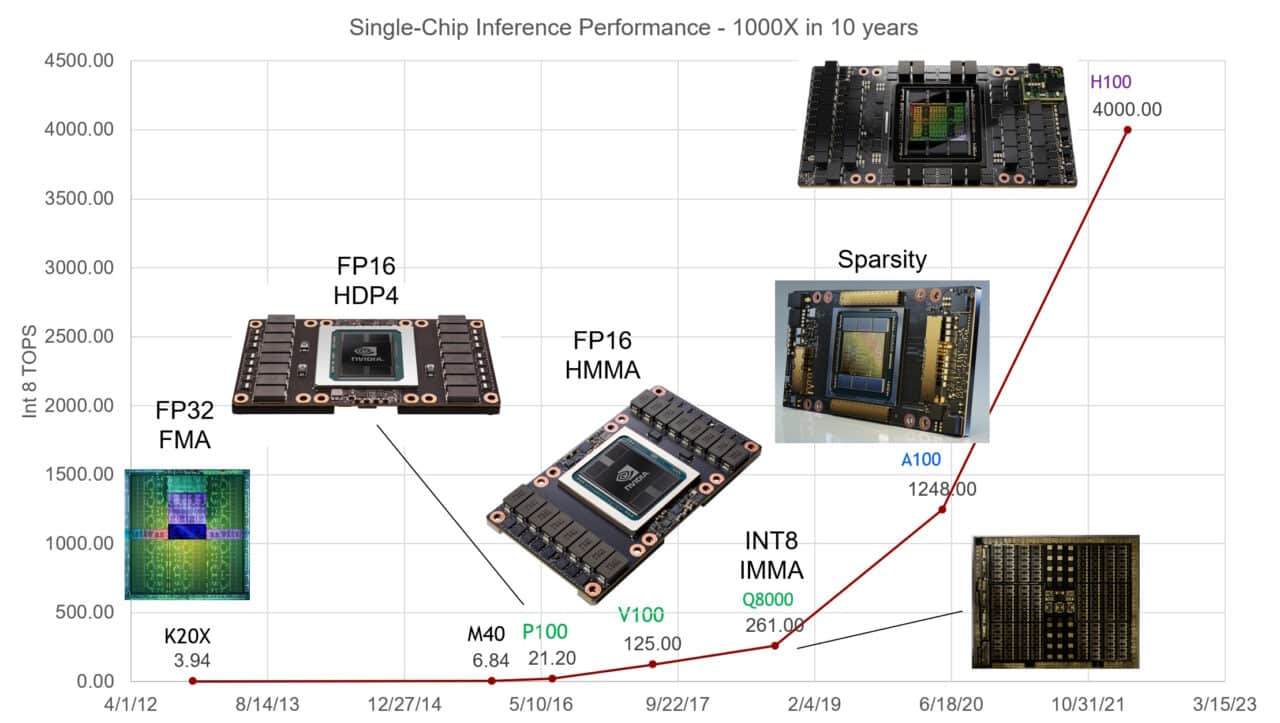

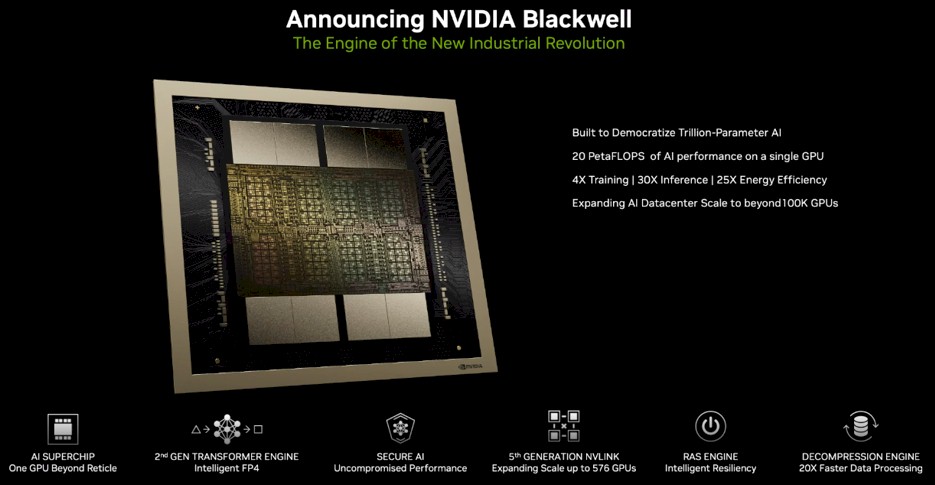

Since the introduction of CUDA in 2007, the GPU software stack has evolved rapidly, with tools like cuDNN and NVIDIA NeMo supporting AI development. NVIDIA’s GPUs consistently lead AI performance benchmarks, driving innovation across industries. The AI sector, now the largest consumer of GPUs, relies on powerful chipsets such as NVIDIA’s H100 and the newer Blackwell platform, which offers substantial improvements in performance and energy efficiency.

GPUs have driven significant advancements in AI across various domains, such as:

- Generative AI: Large language models like GPT-4, which power services used by millions, rely on thousands of GPUs for training and inference. This has enabled rapid advancements in natural language processing and other AI applications.

- Scientific and Industrial Impact: Generative AI, powered by GPUs, is expected to have a massive economic impact, potentially adding $2.6 trillion to $4.4 trillion annually across industries like banking, healthcare, and retail, according to a McKinsey report. NVIDIA GPUs are also being used to address global challenges such as climate change by accelerating AI models that help reduce carbon emissions.

The AI sector has rapidly become the largest consumer of GPUs, driven by the need for immense computational power that CPUs alone cannot provide. AI workloads, such as training large language models (LLMs) and other deep learning models, demand the high-performance capabilities of advanced GPU chipsets like NVIDIA’s H100, which are essential for processing complex datasets and executing sophisticated AI operations.

Figure 15: The Exponential Growth of Single-Chip Inference Performance

Source: NVIDIA’s Official Blog

Most recently, NVIDIA introduced its Blackwell platform, which the company says can run trillion-parameter large language models at up to 25x lower cost and energy consumption than its predecessor. By making large AI models cheaper to run, the platform could broaden access to advanced AI capabilities. As AI workloads grow more demanding, the role of GPUs in high-performance computing is likely to keep expanding, driving further advances in the field.

Figure 16: Blackwell, the new GPU Architecture Developed by NVIDIA

Source: Bios, Introducing the NVIDIA Blackwell

Furthermore, the integration of fifth-generation NVLink technology ensures seamless, high-speed communication across multiple GPUs, allowing for efficient scaling of AI models in data centers and cloud environments. The GB200 Grace Blackwell Superchip exemplifies the convergence of AI and high-performance computing, providing a versatile and powerful toolset for enterprises looking to integrate AI into their operations.

Market Growth and Positioning

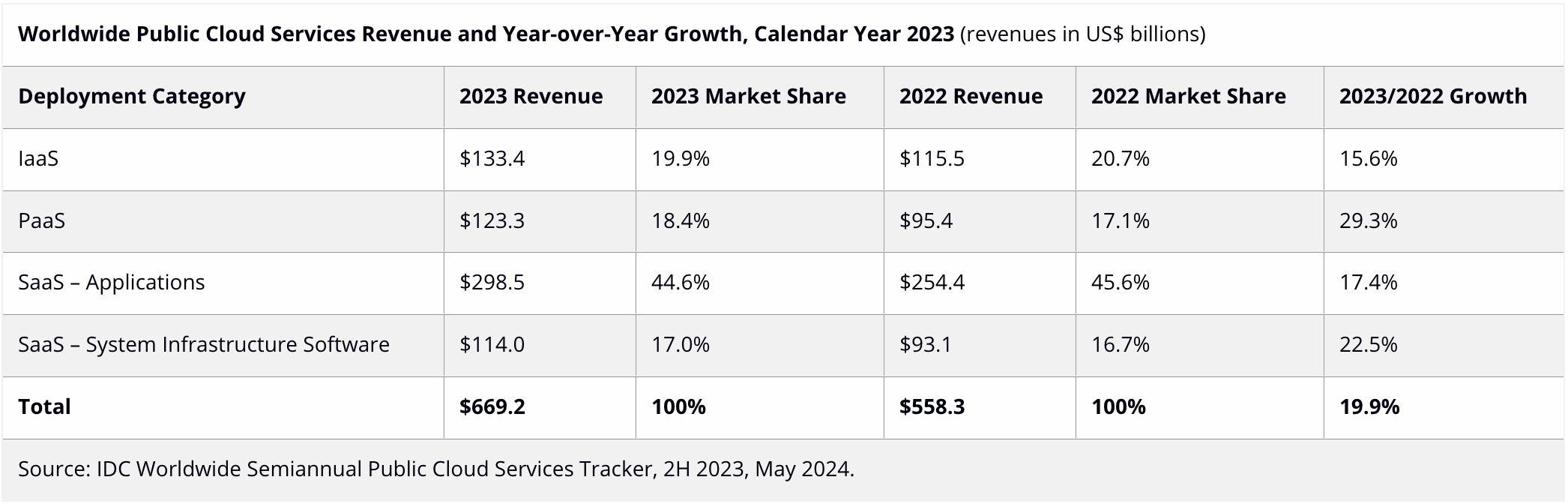

In 2023, the global public cloud services market reached $669.2 billion, a 19.9% increase from the previous year, according to IDC. The Software as a Service – Applications (SaaS – Applications) segment led the market, contributing nearly 45% of the total revenue. Infrastructure as a Service (IaaS) followed, accounting for 19.9%, while Platform as a Service (PaaS) and Software as a Service – System Infrastructure Software (SaaS – SIS) made up 18.4% and 17.0%, respectively. PaaS and SaaS – SIS were the fastest-growing segments, reflecting the rising demand for scalable cloud solutions.

Figure 17: Worldwide Public Cloud Services Revenue and YoY Growth, 2023

Source: IDC
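The IDC figures above can be converted into approximate dollar amounts per segment. In this sketch, the SaaS – Applications share is derived as the remainder of the other three (about 44.7%, consistent with “nearly 45%”). The prior-year total is backed out from the 19.9% growth rate. Both are derived estimates, not figures published by IDC.

```python
TOTAL_2023_USD_BN = 669.2  # worldwide public cloud services revenue, 2023 (IDC)
YOY_GROWTH = 0.199         # 19.9% increase over 2022

shares_pct = {
    "IaaS": 19.9,
    "PaaS": 18.4,
    "SaaS - SIS": 17.0,
}
# Derive the largest segment as the remainder so the shares sum to 100%.
shares_pct["SaaS - Applications"] = round(100.0 - sum(shares_pct.values()), 1)

# Approximate revenue per segment, in USD billions.
revenue_bn = {seg: round(TOTAL_2023_USD_BN * pct / 100, 1) for seg, pct in shares_pct.items()}

# Implied 2022 market size, backed out from the growth rate.
implied_2022_bn = round(TOTAL_2023_USD_BN / (1 + YOY_GROWTH), 1)

for seg, bn in revenue_bn.items():
    print(f"{seg}: ~${bn}B ({shares_pct[seg]}%)")
print(f"Implied 2022 total: ~${implied_2022_bn}B")
```

On these derived numbers, IaaS comes to roughly $133 billion in 2023. IaaS is the layer that compute-focused DePIN networks compete with most directly.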

According to data published by Synergy Research Group, Amazon Web Services (AWS) held a 31% market share, generating $24.2 billion in quarterly revenue. However, AWS’s growth slowed to 13% year over year, weighed down by reduced tech spending. In contrast, Microsoft and Google Cloud saw higher growth rates of 30% and 26%, respectively. Chinese providers Alibaba Cloud and Tencent Cloud held a combined 5% of the global market.

Figure 18: Worldwide Cloud Computing Market Share

Source: Visual Capitalist

The surge in AI and machine learning has significantly boosted demand for cloud services, particularly high-performance GPUs needed for training large-scale AI models. This trend presents a substantial opportunity for cloud providers as various industries increasingly rely on cloud platforms for AI deployments.

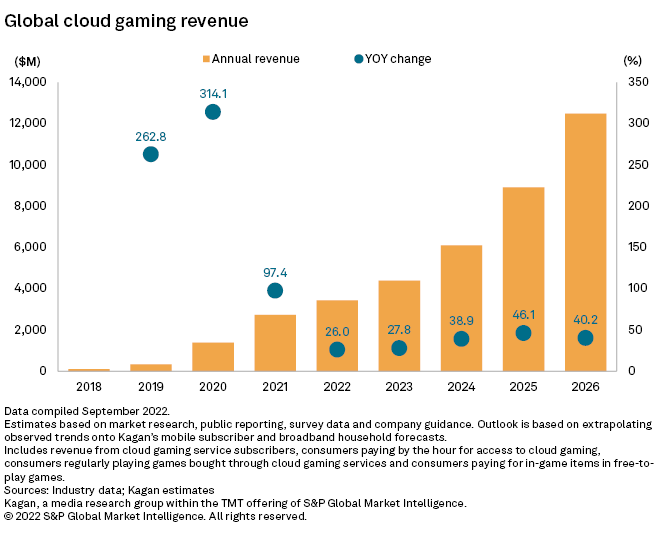

Cloud gaming, valued at over $5 billion in 2023 and growing at a 37% CAGR (according to data compiled by GreyViews), is another high-growth area that cloud platforms are targeting. Because cloud gaming relies on high-performance GPUs for smooth, low-latency gameplay, it is a natural fit for DePIN GPU networks, which could offer scalable and cost-effective infrastructure.

Figure 19: Global Cloud Gaming Revenues

Source: S&P Global

U.S. firms continue to dominate the cloud market, but the increasing reliance on cloud infrastructure for AI is creating new opportunities. Nearly 98% of companies globally now rely on cloud services for at least some of their business applications. This widespread adoption, coupled with the rapid growth of AI, points to sustained and rising demand for cloud-based computational power.

The DePIN sector is strategically positioned to capture significant market share in this expanding landscape, particularly in AI and machine learning, which require scalable GPU resources for both training and inference. Customers of traditional cloud GPU providers face high costs and compute shortages, whereas a decentralized model that taps idle and underutilized enterprise GPU power could offer a more cost-effective option for both startups and established enterprises.

Challenges of Centralized Cloud Infrastructure

The rapid growth of the cloud infrastructure sector, the high cost of deploying and maintaining data centers, and ever-growing demand for storage and compute have produced an oligopolistic market. This market stifles innovation by limiting competition, concentrates risk, presents nearly insurmountable barriers to entry, and raises several ethical issues: data security, the limitation of consumer choice through vendor lock-in, and unequal bargaining power over terms of service and privacy policies.

High Costs and Limited Bargaining Power

Centralized cloud providers like AWS, Azure, and Google Cloud control pricing, often imposing high costs on users. This lack of competition limits market diversity and stifles innovation, particularly affecting SMEs and startups that lack the financial power to negotiate better terms.

Resource Wastage

Centralized cloud services often lead to inefficient resource utilization. Many enterprises purchase more cloud resources than they need, and Flexera estimates that about 27% of cloud spend was wasted as of 2024. This inefficiency translates into billions of dollars lost annually.

Figure 20: Estimated Wasted Cloud Spend on IaaS and PaaS

Source: Flexera

Lack of Flexibility and Customization

Centralized cloud services often offer standardized solutions that may not cater to the specific needs of all businesses. This lack of flexibility can limit how effectively organizations can deploy and optimize their AI workloads, forcing them to adapt their processes to fit the cloud provider’s offerings rather than the other way around.

Data Sovereignty and Privacy Concerns

Centralized cloud providers often store data in specific regions, which can lead to concerns over data sovereignty and privacy, especially when data crosses international borders. Governments and organizations might face challenges in ensuring compliance with local data protection regulations, leading to legal and ethical risks.

Ethical AI and Machine Learning

Deploying AI at scale through centralized cloud providers raises ethical concerns, such as potential biases in AI models and the need for transparency in algorithmic decisions. These issues necessitate careful consideration of the broader impacts of AI deployment.

Market Dependency and Lock-In

Businesses relying heavily on a single cloud provider risk becoming dependent on that provider’s ecosystem, leading to potential vendor lock-in. This dependency can make it difficult and costly to switch providers or diversify services, limiting business agility and increasing long-term costs.

Vulnerability to Single Points of Failure

Centralized infrastructure is more susceptible to disruptions caused by outages or cyber-attacks. If a major cloud provider experiences downtime, it can affect a vast number of businesses globally, leading to significant financial losses and operational disruptions.

Decentralized Innovation Hurdles

The dominance of centralized providers can hinder the growth and adoption of decentralized models like DePIN (Decentralized Physical Infrastructure Networks), which offer more open, competitive, and resource-efficient alternatives. These models could provide better accessibility and affordability for smaller players but struggle to gain traction in a market controlled by a handful of hyperscalers.

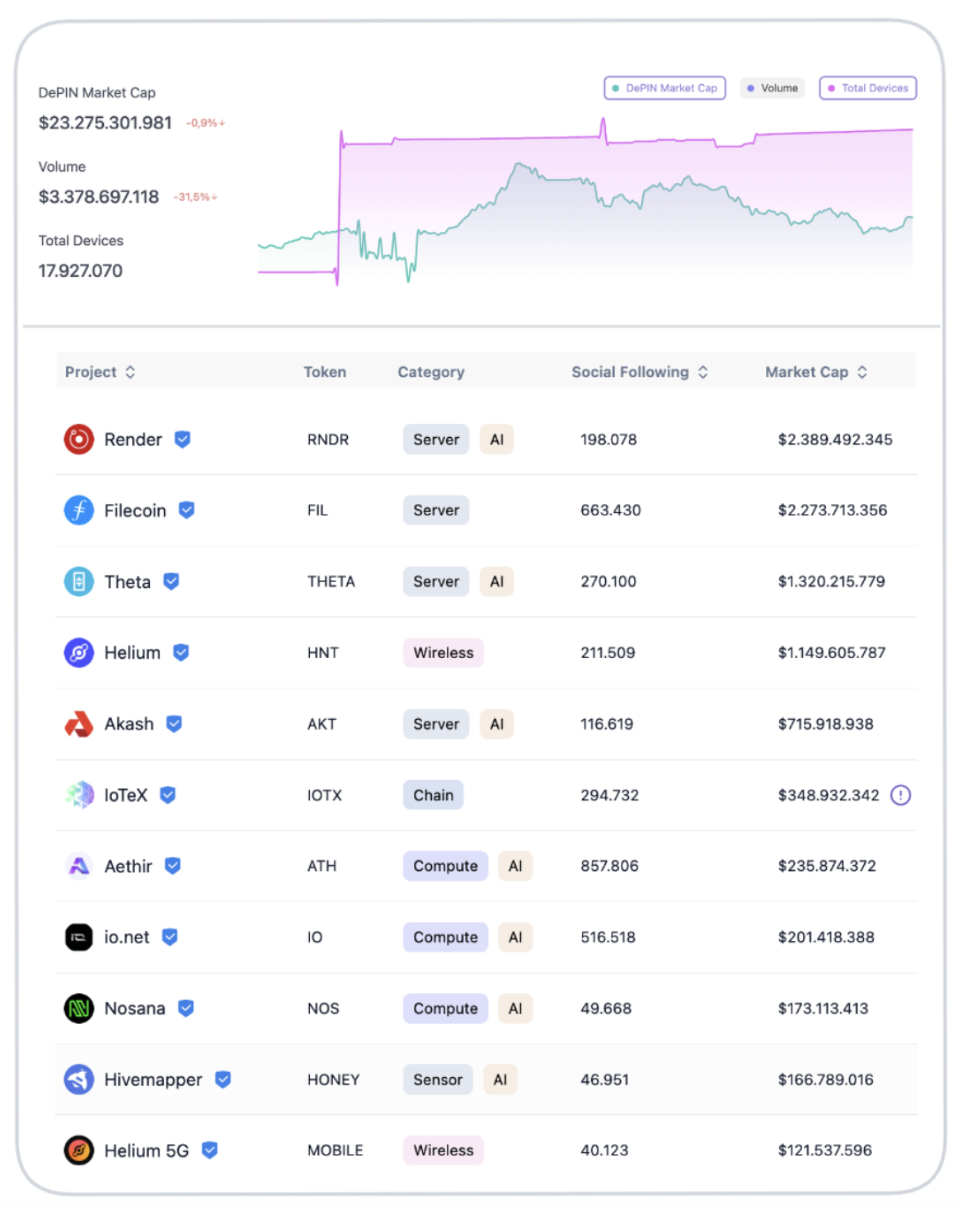

The Rapid Rise of an Alternative: DePIN

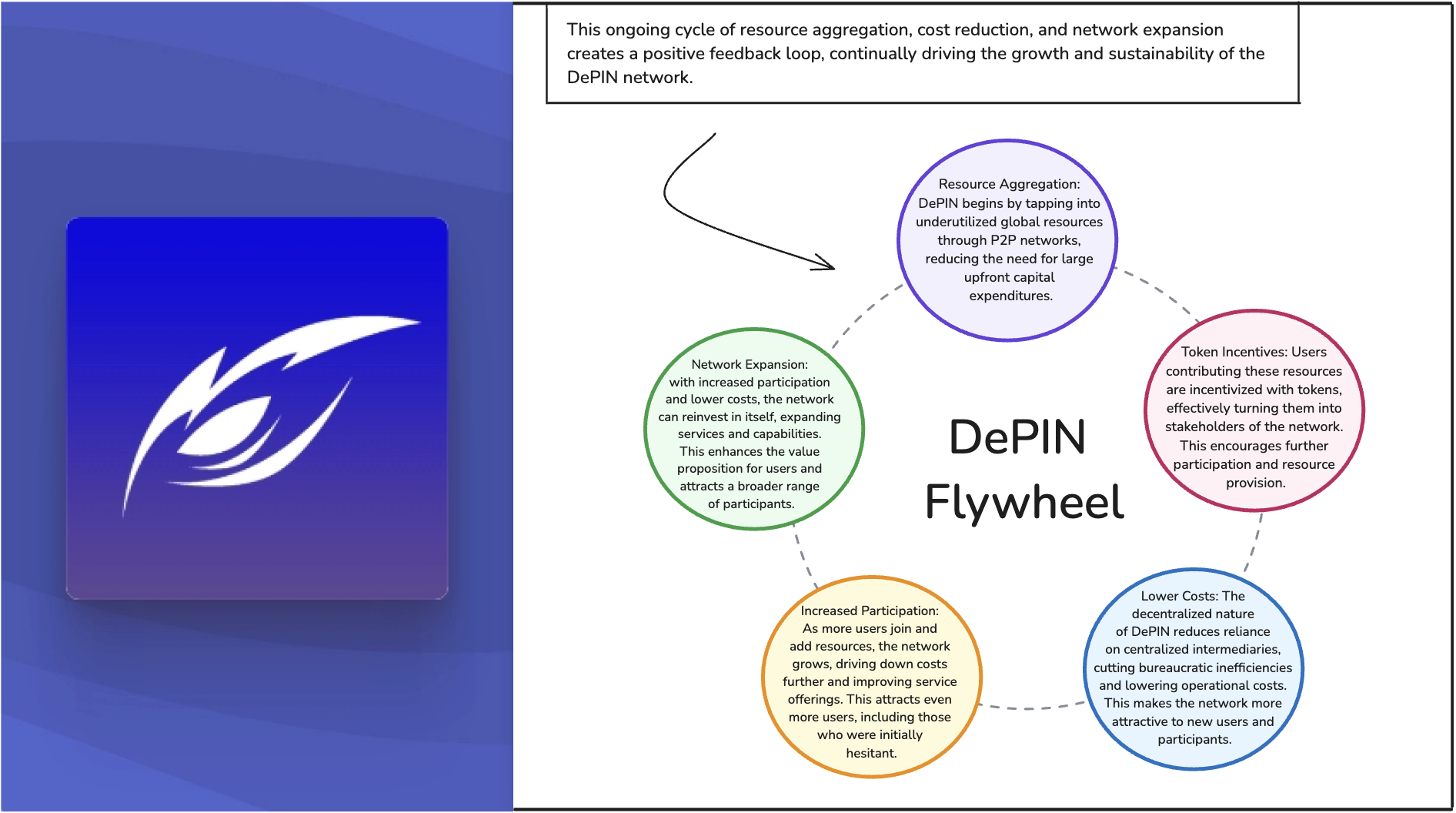

DePIN, or Decentralized Physical Infrastructure Networks, represents a transformative approach to building and managing critical infrastructure. Unlike traditional models dominated by large centralized corporations, DePIN relies on a decentralized network of individual contributors who provide resources such as storage, computing power, energy, and communication bandwidth. These contributors are incentivized through token rewards, creating a global, decentralized infrastructure ecosystem.

This paradigm shift offers several benefits:

Decentralization of Infrastructure

DePIN shifts control from centralized giants to a distributed network of individual providers. This democratization reduces reliance on large corporations, fostering a more open and resilient infrastructure system. Just as decentralized finance (DeFi) transformed financial systems, DePIN decentralizes infrastructure management, empowering individuals to collectively build and maintain critical services.

Horizontal Scalability

DePIN networks scale horizontally, adding resources as needed and adjusting to demand in real time. In principle, growth is limited mainly by the supply of resources that contributors are willing to provide, with blockchain-based mechanisms coordinating control and allocation.

Market Impact and Scalability

The DePIN sector is rapidly growing, with projects like Hivemapper, Render, Helium, and Io.net leading the way. DePIN’s ability to scale horizontally provides a competitive edge, enabling it to meet rising demands in various sectors, including AI and high-performance computing.

Figure 21: List of The Most Capitalized DePIN Projects

Source: DePiN Scan

Because capacity can expand or contract with demand, DePIN systems can scale up or down without major disruption, bounded mainly by the availability of dormant resources.

Community Control and Fair Pricing

In DePIN, network control is distributed among participants, similar to miners in Proof-of-Work networks. This decentralized control is meant to keep pricing fair and transparent, avoiding the price inflation seen in centralized models and making services more affordable and accessible.

Cost-Efficient Operation

DePIN operates with minimal overhead, as infrastructure is provided by a distributed network of contributors. This reduces costs and allows providers to optimize resource allocation across multiple networks, offering high-quality services at lower prices.

Permissionless Participation and Incentivization

DePINs are open and permissionless, allowing anyone with resources to join as a provider and access services without complex negotiations. Token incentives drive participation, creating a flywheel effect that enhances network growth and resource sharing.

Figure 22: DePIN Flywheel

Source: Revelo Intel

Enhanced Scalability and Resource Availability

By leveraging a globally distributed network, DePIN ensures resources are readily available and scalable, effectively addressing resource scarcity and supporting computationally intensive tasks.

Resilience and Reliability

DePIN’s decentralized nature mitigates security risks by distributing data and compute tasks across a wide network, reducing the threat of cyberattacks and enhancing privacy. By eliminating single points of failure, DePIN enhances system resilience. Distributed workloads ensure continuous operation even if some nodes face issues, providing consistent performance and high availability.
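The single-point-of-failure argument can be quantified under a simplifying assumption. Suppose a workload or dataset is replicated on k nodes, each independently available with probability p. The probability that at least one copy is reachable is then 1 − (1 − p)^k. Real outages are often correlated (shared regions, common software bugs), so this estimate is likely optimistic. The node availability values below are hypothetical.

```python
def availability_with_replicas(node_availability: float, replicas: int) -> float:
    """Probability that at least one of `replicas` independent nodes is up."""
    if not 0.0 <= node_availability <= 1.0:
        raise ValueError("node_availability must be between 0 and 1")
    if replicas < 1:
        raise ValueError("replicas must be at least 1")
    # The workload is unavailable only if every replica fails at the same time.
    return 1.0 - (1.0 - node_availability) ** replicas


def replicas_needed(node_availability: float, target: float) -> int:
    """Smallest number of independent replicas that meets the availability target."""
    if not 0.0 < node_availability <= 1.0:
        raise ValueError("node_availability must be greater than 0 and at most 1")
    if not 0.0 < target < 1.0:
        raise ValueError("target must be strictly between 0 and 1")
    k = 1
    while availability_with_replicas(node_availability, k) < target:
        k += 1
    return k


# Hypothetical consumer-grade nodes that are each online 95% of the time.
for k in (1, 2, 3):
    print(k, round(availability_with_replicas(0.95, k), 6))
print(replicas_needed(0.95, 0.999))
```

Under the independence assumption, three replicas on nodes that are each up 95% of the time already exceed 99.9% availability. This is the intuition behind spreading work across many modest machines.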

Cost-Efficiency and Resource Optimization

DePIN offers flexible pricing driven by competitive markets and dynamic resource allocation, reducing costs and improving resource utilization compared to centralized providers. With customizable computational resources tailored to the specific needs of workloads, DePIN platforms can provide a more adaptable infrastructure for diverse applications.
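Competitive pricing with dynamic allocation can be illustrated by a greedy matcher that fills a GPU request from the cheapest matching offers first. This is a hypothetical sketch: the `Offer` fields, GPU labels, and prices are invented for illustration. A production marketplace would likely also need steps this omits, such as provider reputation, hardware verification, and payment settlement.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass(frozen=True)
class Offer:
    provider: str               # hypothetical provider identifier
    gpu_model: str              # e.g. "H100"
    price_per_gpu_hour: float   # asking price set by the provider
    available_gpus: int


def match_job(offers: List[Offer], gpu_model: str,
              gpus_needed: int) -> List[Tuple[str, int, float]]:
    """Fill a request for `gpus_needed` GPUs from the cheapest matching offers.

    Returns (provider, gpus_allocated, price_per_gpu_hour) tuples and raises
    ValueError if the combined matching supply is too small."""
    candidates = sorted(
        (o for o in offers if o.gpu_model == gpu_model and o.available_gpus > 0),
        key=lambda o: o.price_per_gpu_hour,
    )
    allocation = []
    remaining = gpus_needed
    for offer in candidates:
        if remaining == 0:
            break
        take = min(remaining, offer.available_gpus)
        allocation.append((offer.provider, take, offer.price_per_gpu_hour))
        remaining -= take
    if remaining > 0:
        raise ValueError("not enough matching supply for this request")
    return allocation


offers = [
    Offer("node-a", "H100", 2.50, 4),
    Offer("node-b", "H100", 2.00, 2),
    Offer("node-c", "A100", 1.20, 8),
    Offer("node-d", "H100", 3.10, 8),
]
plan = match_job(offers, "H100", 5)
hourly_cost = sum(n * price for _, n, price in plan)
print(plan, hourly_cost)
```

Because providers compete on price, the five-GPU request lands on the two cheapest H100 offers. A cheaper newcomer would win subsequent requests without any negotiation.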

Democratization of Access to High-Performance Computing

DePIN lowers barriers to high-performance computing, making powerful resources like GPUs accessible to startups, small businesses, and individual developers, fostering innovation and inclusivity.

Enhanced Integration with Web3 and Token Economies

DePIN integrates with Web3 technologies, using tokens as a medium of exchange for computational resources and leveraging smart contracts for transparent resource management and reward distribution.
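Reward distribution by smart contract often reduces to a pro-rata split: a fixed token emission per epoch is shared among providers in proportion to their verified contribution. The sketch below assumes that design; individual DePIN projects use their own, often more elaborate, reward formulas. It uses integer arithmetic in the token’s smallest unit, because on-chain languages such as Solidity have no native floating-point type. The function name and figures are hypothetical.

```python
from typing import Dict, Tuple


def distribute_rewards(epoch_emission: int,
                       contributions: Dict[str, int]) -> Tuple[Dict[str, int], int]:
    """Split one epoch's token emission pro rata to each provider's contribution.

    Amounts are integers in the token's smallest unit. Floor division can leave
    a small remainder, which is returned separately (a contract might carry it
    over to the next epoch)."""
    total = sum(contributions.values())
    if total == 0:
        # No verified work this epoch: nothing is paid out.
        return {provider: 0 for provider in contributions}, epoch_emission
    rewards = {
        # Multiply before dividing to limit rounding loss.
        provider: epoch_emission * amount // total
        for provider, amount in contributions.items()
    }
    remainder = epoch_emission - sum(rewards.values())
    return rewards, remainder


# Hypothetical epoch: 1,000 reward units; contributions measured in GPU-hours.
rewards, leftover = distribute_rewards(1_000, {"alice": 10, "bob": 20, "carol": 30})
print(rewards, leftover)
```

Returning the rounding remainder explicitly keeps the accounting exact: paid-out rewards plus the remainder always equal the epoch’s emission.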

The Challenge of Balancing Supply and Demand

The DePIN (Decentralized Physical Infrastructure Networks) sector relies on token incentives to attract hardware suppliers, particularly those with GPUs, to join the network. However, the viability and attractiveness of any DePIN project are closely tied to how well it balances supply and demand, particularly in the context of volatile token prices.

DePIN projects typically use native tokens to incentivize hardware suppliers, which creates significant challenges because of token price volatility. Suppliers must estimate how quickly they can recover their hardware investment through token rewards, a calculation that becomes complex and risky when token prices swing widely and suddenly. The model’s viability depends on delivering a stable and predictable ROI: if token prices plummet, suppliers take longer to break even, which can make participating in the network less attractive.
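The sensitivity of supplier ROI to token price can be shown with a simple payback calculation. The sketch assumes a fixed monthly token reward and fixed operating costs paid in dollars (for example electricity and bandwidth). All names and numbers are hypothetical. Because operating costs do not fall with the token price, a price drop can lengthen the payback period disproportionately or eliminate payback altogether.

```python
from typing import Iterable, Optional


def payback_months(hardware_cost_usd: float, tokens_per_month: float,
                   token_price_usd: float, monthly_opex_usd: float) -> Optional[float]:
    """Months to recover the hardware cost at a constant token price.

    Returns None if monthly rewards do not exceed operating costs."""
    net_monthly = tokens_per_month * token_price_usd - monthly_opex_usd
    if net_monthly <= 0:
        return None
    return hardware_cost_usd / net_monthly


def payback_along_path(hardware_cost_usd: float, tokens_per_month: float,
                       monthly_opex_usd: float,
                       price_path: Iterable[float]) -> Optional[int]:
    """First month in which cumulative net earnings cover the hardware cost,
    given a month-by-month token price path; None if it never happens."""
    recovered = 0.0
    for month, price in enumerate(price_path, start=1):
        recovered += tokens_per_month * price - monthly_opex_usd
        if recovered >= hardware_cost_usd:
            return month
    return None


# Hypothetical GPU rig: $2,000 of hardware, 100 tokens/month, $50/month to run.
for price in (2.0, 1.0, 0.5):
    print(price, payback_months(2_000, 100, price, 50))

# Same rig, but the token trades at $2 for six months and then crashes to $0.50.
print(payback_along_path(2_000, 100, 50, [2.0] * 6 + [0.5] * 60))
```

Halving the price from $2 to $1 triples the payback period (about 13 months to 40), and halving it again means the rig never pays for itself. In the crash scenario the supplier recovers less than half of the hardware cost before earnings stall.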

Many DePIN projects focus on attracting suppliers with token incentives but fail to stimulate demand to the same degree. This imbalance leads to abundant supply meeting insufficient demand, leaving resources unused and weighing on token values. To succeed, DePIN projects must foster robust demand for their resources. The critical questions are whether there is sufficient buyer interest in these tokens and what they are actually used for.
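Supply-side incentives alone fall short for a simple reason, visible once a supplier’s income per unit of capacity is split into two parts. The first part is fees paid by actual users, which scale with utilization. The second is token emissions, which are fixed per period and diluted across all supply. The sketch assumes demand is spread evenly across suppliers and values emissions in dollars at the current token price. The function name and numbers are hypothetical.

```python
from typing import Tuple


def income_per_supplied_unit(supplied_units: float, demanded_units: float,
                             price_per_unit: float,
                             emission_value_usd: float) -> Tuple[float, float, float]:
    """Return (utilization, fee income, emission subsidy) per unit of supplied capacity."""
    if supplied_units <= 0:
        raise ValueError("supplied_units must be positive")
    utilization = min(1.0, demanded_units / supplied_units)
    fee_income = utilization * price_per_unit        # paid by real users
    subsidy = emission_value_usd / supplied_units    # token emissions, shared by all supply
    return utilization, fee_income, subsidy


# Hypothetical network: 400 GPU-hours of demand at $2.00, $1,000 of emissions per period.
for supply in (1_000, 2_000):
    print(supply, income_per_supplied_unit(supply, 400, 2.0, 1_000))
```

Doubling supply without new demand halves both income streams. In both cases the emission subsidy exceeds fee income, so supplier returns depend more on the token price than on real usage. This is the imbalance described in the paragraph above.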

Despite potentially lower costs, DePIN networks frequently face trust and reliability issues. Unlike established cloud providers such as AWS or Google Cloud, decentralized platforms may not offer continuous maintenance, stability, or support, so enterprises may hesitate to adopt DePIN services regardless of the cost benefits.

The main challenge for DePIN projects is not just attracting hardware suppliers through token incentives but also creating and sustaining demand for their physical resources. Without addressing the demand-side issues, these platforms risk remaining underutilized, regardless of how cost-effective they might appear. To succeed, DePIN projects must build trust, ensure operational reliability, and stabilize their token economies to foster a balanced and sustainable ecosystem.

Conclusion

As we enter a new era shaped by the expanding reach of cloud computing, Decentralized Physical Infrastructure Networks are emerging as a potentially revolutionary force, challenging the centralized models that have long underpinned digital infrastructure. DePIN reimagines how computational resources are distributed and used, aiming for a more resilient, cost-efficient, and inclusive ecosystem. By tapping into the huge potential of underutilized (or even idle) global resources, this field paves the way for a new paradigm in cloud computing that prioritizes democratized access and decentralized control.

However, the success of DePIN is not without its challenges. The balance between supply and demand, the stability of token economics, and the establishment of trust and reliability within these decentralized networks are all critical factors that will determine the future viability of this groundbreaking but fledgling model. As the market continues to evolve, the ability of DePIN projects to address these challenges and capitalize on the growing demand for scalable, high-performance computing will be key to their long-term success.

In conclusion, while DePIN offers an exciting and potentially game-changing approach to cloud infrastructure, its ultimate impact will depend on careful navigation of the complex dynamics at play within this rapidly expanding sector.

The future may indeed be decentralized, but realizing its full potential will require strategic foresight, robust and high-performing technological frameworks, and a deep understanding of market needs across the whole industry.

References

“Unraveling the DePIN Sector” (2023), Ryze Labs, published on Substack.

“Let’s protect our online privacy by decentralized cloud services” (2022), Marcel Ohrenschall, published on Substack.

“Cost efficiency of cloud computing. Centralized vs Decentralized networks” (2023), Marcel Ohrenschall, published on Substack.

“A Primer on the Cloud” (2024), Generative Value, published on Substack.

“An Overview of the Semiconductor Industry” (2023), Generative Value, published on Substack.

“The World’s Biggest Cloud Computing Service Providers” (2024), Visual Capitalist.

“The Case for Compute DePINs” (2024), Shoal Research, published on Substack.

“Navigating the High Cost of AI Compute” (2023), a16z, published on its official blog.

“State of the Cloud Report” (2024), Flexera.

NVIDIA’s Official Blog.

“Public vs Private vs Hybrid: Cloud Differences Explained” (2020), BMC’s official blog.

“A Framework for Software-as-a-Service Selection and Provisioning” (2013), Elarbi Badidi, published on ResearchGate.

“Cloud explained” section, MongoDB.

“Cloud market share 2024 – AWS, Azure, GCP growth fueled by AI”, Holori.

“State of DePIN” (2023), Messari.

“Cloud Market Gets its Mojo Back; AI Helps Push Q4 Increase in Cloud Spending to New Highs” (2024), Synergy Research Group.

“How Many Companies Use Cloud Computing in 2024?”, Edge Delta.

Disclosures

Revelo Intel has never had a commercial relationship with any of the projects listed above and this report was not paid for or commissioned in any way. Members of the Revelo Intel team, including those directly involved in the analysis above, may have positions in the tokens discussed. This content is provided for educational purposes only and does not constitute financial or investment advice. You should do your own research and only invest what you can afford to lose. Revelo Intel is a research platform and not an investment or financial advisor.